Lost at Sea

CS348B Final Project

Dmitry Belogolovsky & Woodley Packard

Our presentation can be found here.

Our short movies can be found here and here.

Our goal was to render an electric storm striking an ocean. We both took several years of ancient Greek in high school, so we decided to theme the picture along those lines: the scene is supposed to represent a Greek ship lost on its way to battle. Here are a couple of lightning photographs that we found inspirational while working on the project:


The image consists of several fairly independent components:

| Flat Texture | Pure Volume |
| Metaballs Volume | Heightfield |

For the sky, we ultimately chose 3D photon mapping [1]. Because the water reflects the sky, finding a physically accurate model for the sky required a lot of attention. Although some of the other approaches looked quite good, volume rendering the 3D photon map added the most realism to the scene. The density function for the sky is 3D Perlin noise.
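Perlin noise can be unfamiliar, so below is a minimal Python sketch of 3D gradient noise in the style of Perlin's improved noise. It also includes a hypothetical cloud density built from that noise. The write-up only says the sky density is 3D Perlin noise. The fractal octave sum and the `threshold` and `scale` parameters in `cloud_density` are illustrative assumptions, not the authors' settings.

```python
import math
import random


class PerlinNoise3D:
    """3D gradient noise in the style of Perlin's 'improved noise'."""

    # Gradients point from the cube center to the 12 cube-edge midpoints.
    GRADIENTS = [(1, 1, 0), (-1, 1, 0), (1, -1, 0), (-1, -1, 0),
                 (1, 0, 1), (-1, 0, 1), (1, 0, -1), (-1, 0, -1),
                 (0, 1, 1), (0, -1, 1), (0, 1, -1), (0, -1, -1)]

    def __init__(self, seed=0):
        perm = list(range(256))
        random.Random(seed).shuffle(perm)
        self.perm = perm + perm  # doubled so hashed indices never run off the end

    @staticmethod
    def _fade(t):
        # Quintic 6t^5 - 15t^4 + 10t^3: flat 1st and 2nd derivatives at lattice points.
        return t * t * t * (t * (t * 6 - 15) + 10)

    def noise(self, x, y, z):
        xi, yi, zi = math.floor(x), math.floor(y), math.floor(z)
        xf, yf, zf = x - xi, y - yi, z - zi
        X, Y, Z = xi & 255, yi & 255, zi & 255
        p = self.perm

        def corner(dx, dy, dz):
            # Hash the lattice corner to a gradient and dot it with the offset vector.
            h = p[p[p[X + dx] + Y + dy] + Z + dz]
            gx, gy, gz = self.GRADIENTS[h % 12]
            return gx * (xf - dx) + gy * (yf - dy) + gz * (zf - dz)

        def lerp(a, b, t):
            return a + t * (b - a)

        # Trilinear blend of the 8 corner contributions with faded weights.
        u, v, w = self._fade(xf), self._fade(yf), self._fade(zf)
        z0 = lerp(lerp(corner(0, 0, 0), corner(1, 0, 0), u),
                  lerp(corner(0, 1, 0), corner(1, 1, 0), u), v)
        z1 = lerp(lerp(corner(0, 0, 1), corner(1, 0, 1), u),
                  lerp(corner(0, 1, 1), corner(1, 1, 1), u), v)
        return lerp(z0, z1, w)


def cloud_density(noise, x, y, z, octaves=4, threshold=0.1, scale=2.0):
    """Hypothetical cloud density: fractal sum of noise octaves, clipped at zero."""
    total, amplitude, frequency = 0.0, 1.0, 1.0
    for _ in range(octaves):
        total += amplitude * noise.noise(x * frequency, y * frequency, z * frequency)
        amplitude *= 0.5
        frequency *= 2.0
    return max(0.0, total - threshold) * scale
```

The noise is zero at every integer lattice point and varies smoothly between them. The clipping step leaves patches of clear sky wherever the summed noise falls below the threshold.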

We traced 10 million photons through the volume of the clouds to generate a volumetric photon map. For space efficiency and fast access, we stored the resulting data as a 3D texture map. This let us read the photon density directly at any point, avoiding the costly nearest-neighbor search that a photon map normally requires.
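The 3D-texture idea can be sketched as a voxel grid that accumulates photon power while photons are traced. At render time, a density query is then a single array read rather than a k-nearest-neighbor search. `PhotonGrid`, its resolution, and nearest-voxel binning are assumptions made for illustration; they are not the authors' actual data layout.

```python
import math


class PhotonGrid:
    """Hypothetical voxel grid that bins photon power for O(1) density lookups."""

    def __init__(self, bounds_min, bounds_max, res):
        self.bmin = bounds_min
        self.res = res  # voxel counts (nx, ny, nz)
        self.cell = [(bounds_max[i] - bounds_min[i]) / res[i] for i in range(3)]
        self.voxel_volume = self.cell[0] * self.cell[1] * self.cell[2]
        self.power = [0.0] * (res[0] * res[1] * res[2])

    def _index(self, p):
        """Flat index of the voxel containing p, or None if p is outside the grid."""
        ijk = []
        for axis in range(3):
            c = math.floor((p[axis] - self.bmin[axis]) / self.cell[axis])
            if c < 0 or c >= self.res[axis]:
                return None
            ijk.append(c)
        i, j, k = ijk
        return (k * self.res[1] + j) * self.res[0] + i

    def deposit(self, p, photon_power):
        """Record a photon interaction at p (called while tracing photons)."""
        idx = self._index(p)
        if idx is not None:
            self.power[idx] += photon_power

    def power_density(self, p):
        """Photon power per unit volume at p: one array read, no neighbor search."""
        idx = self._index(p)
        return 0.0 if idx is None else self.power[idx] / self.voxel_volume
```

Nearest-voxel binning gives up the smooth kernel estimate of a classic photon map in exchange for constant-time lookups. Splatting photons into the grid and reading it back with trilinear interpolation would reduce blockiness at the same asymptotic cost.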

Next, we used Peter Williams' "Volume Density Optical Model" [2] to render the photon map as a luminous volume. Because the photon map already stores the scattered light, we did not have to recursively trace shadow and scatter rays from each step of our primary ray march. This reduced the general-case volume rendering integration, whose cost grows exponentially with recursion depth, to a linear-time algorithm.

| A slice of the 3D photon map | The resulting image |
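The single-pass rendering described above can be illustrated with the emission-absorption form of a volume density optical model. The renderer marches once along the primary ray. At each step it adds the locally stored glow, attenuated by the transmittance accumulated so far. This is a sketch under assumptions: the `extinction` and `source` callbacks are hypothetical. The source term would be looked up from the 3D texture holding the photon map instead of being computed with secondary rays, which is what keeps the cost linear in the number of steps.

```python
import math


def march_emission_absorption(origin, direction, t_max, n_steps, extinction, source):
    """Front-to-back ray march of an emission-absorption volume.

    extinction(p): extinction coefficient at p (1 / length)
    source(p):     radiance emitted per unit optical depth at p, e.g. a lookup
                   into the 3D photon-map texture (a hypothetical callback)
    Returns (radiance reaching the eye, transmittance of the whole segment).
    """
    dt = t_max / n_steps
    radiance, transmittance = 0.0, 1.0
    for i in range(n_steps):
        t = (i + 0.5) * dt  # sample at the midpoint of this slab
        p = tuple(origin[k] + t * direction[k] for k in range(3))
        alpha = 1.0 - math.exp(-extinction(p) * dt)  # opacity of the slab
        radiance += transmittance * alpha * source(p)
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # stop early once the volume is effectively opaque
            break
    return radiance, transmittance
```

Consider a homogeneous slab with constant extinction σ and source E over length L. The loop returns radiance E(1 - e^(-σL)) and transmittance e^(-σL). The discrete sum reproduces this exactly because the per-step contributions telescope.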

The ocean is raytraced using Musgrave's QAEB-tracing algorithm [5]. Precise procedural normals are available from the underlying analytically defined surface. Reflection rays are generated upon collision, and weighted by a function of their incident angle. We did not trace any rays underwater, but we did use an approximation to the subsurface scattering caused by the bright lightning:

| No Subsurface Scattering | With Subsurface Scattering |
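For the ocean, the central idea of QAEB tracing, as we understand it, is to march with steps that grow in proportion to distance, so that each step covers roughly a constant angle on screen. The sketch below applies that idea to a generic heightfield callback. It is a simplified illustration rather than Musgrave's full algorithm, and the bisection refinement is our addition. The write-up also does not say which function of incident angle weights the reflection rays. `schlick_fresnel` shows Schlick's approximation as one common choice, with an assumed normal-incidence reflectance of 0.02 for water.

```python
import math


def qaeb_style_march(origin, direction, height, t_min, t_max, epsilon):
    """Ray-march a heightfield z = height(x, y) with steps proportional to distance.

    A step of epsilon * t subtends a roughly constant angle at the eye, so setting
    epsilon to the per-pixel angle keeps the marching error near one pixel.
    t_min and epsilon must be positive. Returns the hit distance along the unit
    direction, or None if the ray misses before t_max.
    """
    if t_min <= 0.0 or epsilon <= 0.0:
        raise ValueError("t_min and epsilon must be positive")

    def clearance(t):
        # Height of the ray point above the surface (negative means below it).
        return origin[2] + t * direction[2] - height(origin[0] + t * direction[0],
                                                     origin[1] + t * direction[1])

    t_prev = t_min
    if clearance(t_prev) <= 0.0:
        return t_prev
    while t_prev < t_max:
        t = min(t_prev + epsilon * t_prev, t_max)
        if clearance(t) <= 0.0:
            lo, hi = t_prev, t
            for _ in range(40):  # bisection locates the crossing inside this step
                mid = 0.5 * (lo + hi)
                if clearance(mid) > 0.0:
                    lo = mid
                else:
                    hi = mid
            return hi
        t_prev = t
    return None


def schlick_fresnel(cos_theta, f0=0.02):
    """Schlick's approximation; f0 of about 0.02 is water's reflectance at normal incidence."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5
```

Because the step grows geometrically, reaching distant water takes only logarithmically many evaluations of the height function. The Fresnel weight rises toward 1 at grazing angles, which is why distant water mirrors the sky most strongly.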

Since the water is defined by simple wave functions, we could solve the governing partial differential equation for its motion analytically. Using d'Alembert's solution to the wave equation [7], we animated the water in a physically accurate fashion without having to solve PDEs numerically.
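d'Alembert's solution writes any solution of the 1D wave equation as u(x, t) = f(x - ct) + g(x + ct), that is, as profiles translating at speed c. In 2D, a plane wave f(d·p - ct) with unit direction d satisfies the wave equation in the same way. A sum of such waves can therefore be evaluated in closed form at any time t. The sketch below uses a hypothetical set of sinusoidal waves and an assumed wave speed, since the write-up does not list the authors' wave functions. It also returns the analytic gradient, which is one way to obtain exact procedural normals for the ocean.

```python
import math

# Hypothetical wave set: (amplitude, wavenumber, direction angle, phase).
WAVES = [(0.5, 0.2, 0.0, 0.0), (0.2, 0.7, 1.1, 1.3), (0.05, 2.3, -0.6, 0.4)]
WAVE_SPEED = 3.0  # assumed c in u_tt = c^2 (u_xx + u_yy)


def height(x, y, t, waves=WAVES, c=WAVE_SPEED):
    """Sum of plane waves f(d.p - c t); each term solves the 2D wave equation."""
    h = 0.0
    for a, k, theta, phi in waves:
        s = math.cos(theta) * x + math.sin(theta) * y  # position along direction d
        h += a * math.sin(k * (s - c * t) + phi)
    return h


def gradient(x, y, t, waves=WAVES, c=WAVE_SPEED):
    """Analytic (dh/dx, dh/dy) of the same wave sum."""
    gx = gy = 0.0
    for a, k, theta, phi in waves:
        s = math.cos(theta) * x + math.sin(theta) * y
        slope = a * k * math.cos(k * (s - c * t) + phi)
        gx += slope * math.cos(theta)
        gy += slope * math.sin(theta)
    return gx, gy


def normal(x, y, t):
    """Unit surface normal (-dh/dx, -dh/dy, 1) normalized."""
    gx, gy = gradient(x, y, t)
    n = math.sqrt(gx * gx + gy * gy + 1.0)
    return (-gx / n, -gy / n, 1.0 / n)
```

Animating a frame only changes `t`. No time stepping is involved, so frames can be rendered independently and in any order.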

Although the lightning geometries tend to look fine without tweaking, we also implemented a Lightning Modeling Studio GUI to perfect them before rendering. We implemented the Lightning Studio in YZ-Windows.

To get a sense of the temporal aspects of lightning storms, we watched a couple of videos of lightning (from wvlightning.com) and noticed that the geometries repeated for several seconds. We thus decided to use the same geometry for multiple flashes in our short video. Here is a lightning scientist's explanation of this effect:

In lightning science vocabulary, lightning is broken down into several parts. The initial downward discharge is called a "leader". The "return stroke" that completes the electrical current is the bright, visual channel that we see. Several return strokes can move up and down this channel many times, creating the "flickering" effect. This entire event may last half a second and is called a "flash". Outside the lightning science community, most people commonly refer to the entire, half-second lightning event as a "strike" or "bolt". [8]

To speed up the volume integration for the homogeneous, low-density atmospheric medium, we used an approximation to the volume rendering equation and derived a closed-form expression for the contribution of a lightning segment to the glow along a ray. This saved us the expense of marching along each ray through the expansive atmospheric volume. The mathematics of the optimization are described in this brief document (PDF).
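The closed-form glow derivation is in the linked PDF and is not reproduced here. A related shortcut can be sketched under two assumptions: no attenuation, and isotropic single scattering. Then the glow from a point light p along a unit ray o + t·d, t in [0, T], is proportional to the integral of 1/|o + t·d - p|^2. That integral has the closed form (atan((T - t0)/h) + atan(t0/h)) / h, where t0 is the ray parameter of closest approach and h is the closest distance. The sketch evaluates this exactly along the ray and integrates over the lightning segment with a midpoint rule. It is not the authors' expression; `segment_glow`, its parameters, and the midpoint quadrature are assumptions.

```python
import math


def ray_point_glow(o, d, T, p):
    """Closed form of the integral of 1/|o + t*d - p|^2 over t in [0, T], for unit d."""
    w = [p[i] - o[i] for i in range(3)]
    t0 = sum(w[i] * d[i] for i in range(3))  # ray parameter of closest approach
    h2 = sum(c * c for c in w) - t0 * t0     # squared closest distance
    h = math.sqrt(max(h2, 1e-12))            # guard: ray passing through the light
    return (math.atan((T - t0) / h) + math.atan(t0 / h)) / h


def segment_glow(o, d, T, a, b, intensity_per_length, sigma_s, samples=16):
    """Unattenuated, isotropic single-scattered glow from segment a-b along the ray.

    Exact along the ray (ray_point_glow); midpoint rule along the segment.
    """
    seg_len = math.dist(a, b)
    total = 0.0
    for i in range(samples):
        s = (i + 0.5) / samples
        p = [a[k] + s * (b[k] - a[k]) for k in range(3)]
        total += ray_point_glow(o, d, T, p)
    # Isotropic phase function 1/(4*pi); each sample stands for seg_len / samples of arc.
    return sigma_s / (4.0 * math.pi) * intensity_per_length * total * seg_len / samples
```

The cost per ray is a handful of arctangents per lightning sample, independent of how far the ray travels through the atmosphere.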

The lightning's illumination of the clouds was computed with a photon map, as described above. We sampled the lightning uniformly at random to generate initial photon positions, then traced the photons' paths through the clouds to build the map. Diffuse shading calculations integrated the lightning uniformly by arc length.
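Sampling the lightning uniformly by arc length amounts to two steps: pick a segment with probability proportional to its length, then pick a uniform point along that segment. The sketch below does this with a cumulative-length table and binary search, and pairs it with a uniform random emission direction. Representing the bolt as `(a, b)` endpoint pairs and treating emission as isotropic are assumptions.

```python
import bisect
import itertools
import math
import random


def build_length_cdf(segments):
    """Running total of segment lengths for a lightning polyline of (a, b) pairs."""
    return list(itertools.accumulate(math.dist(a, b) for a, b in segments))


def sample_lightning_point(segments, cdf, rng):
    """Point chosen uniformly by arc length over all segments; returns (point, index)."""
    u = rng.random() * cdf[-1]
    i = min(bisect.bisect_right(cdf, u), len(segments) - 1)
    start = cdf[i - 1] if i > 0 else 0.0
    s = (u - start) / (cdf[i] - start)  # fraction of the way along segment i
    a, b = segments[i]
    return tuple(a[k] + s * (b[k] - a[k]) for k in range(3)), i


def random_direction(rng):
    """Uniform direction on the unit sphere (isotropic emission is an assumption)."""
    z = 2.0 * rng.random() - 1.0
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)
```

The same sampler serves diffuse shading: averaging the contribution of sampled points and multiplying by the total length `cdf[-1]` estimates the integral over the bolt by arc length.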

Our code only supports these lights in the form of discrete line segments, but our technique is general and may easily be applied to an arbitrary 1-manifold with boundary. The linear light sources can also define color and brightness as functions along the path. A useful and relatively simple optimization to consider for the future is importance sampling the lightning's geometry when performing diffuse shading calculations. This could be accomplished by using the combined brightness and projected angle of a lightning segment as a probability distribution function.
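The proposed importance sampling can be sketched as a discrete distribution over segments. Each segment's weight is its brightness times the angle it subtends at the shading point. We read "projected angle" as that subtended angle, which is an interpretation, and the function names are hypothetical. Drawing segment i with probability pdf[i] and weighting its contribution f_i by 1 / pdf[i] gives an unbiased estimate of the sum over all segments.

```python
import math
import random


def subtended_angle(x, a, b):
    """Angle (radians) that segment a-b subtends as seen from shading point x."""
    u = [a[i] - x[i] for i in range(3)]
    v = [b[i] - x[i] for i in range(3)]
    cos_angle = sum(u[i] * v[i] for i in range(3)) / (math.hypot(*u) * math.hypot(*v))
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def segment_pdf(x, segments, brightness):
    """Discrete pdf over segments, proportional to brightness * subtended angle."""
    weights = [brightness[i] * subtended_angle(x, a, b) for i, (a, b) in enumerate(segments)]
    total = sum(weights)
    return [w / total for w in weights]


def pick_segment(pdf, rng):
    """Draw a segment index from the discrete pdf by inverting its running sum."""
    u, running = rng.random(), 0.0
    for i, p in enumerate(pdf):
        running += p
        if u < running:
            return i
    return len(pdf) - 1  # guards against round-off leaving the sum just below 1
```

The weights must be nonzero for every segment that can contribute light. Otherwise those segments are never sampled and the estimate is biased.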

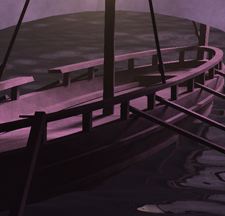

The final images were rendered at 3600 by 2700 pixels with 100 shadow rays per eye ray, and then resampled down to 1280 by 960 to reduce aliasing. The close-up image of the boat with the purple lightning took about 8 hours of rendering time, and the distant views took about 1 hour each.
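Resampling 3600 by 2700 down to 1280 by 960 is a scale of 2.8125 on both axes, so output pixels do not line up with whole input pixels. The write-up does not name the filter used. A box (area-averaging) filter is one simple option that handles non-integer ratios: each output pixel averages the source pixels it covers, weighted by how much of each it covers. This separable sketch works on a grayscale image stored as nested lists.

```python
import math


def resample_1d(src, n_out):
    """Box-filter resample: each output sample averages the input span it covers."""
    n_in = len(src)
    scale = n_in / n_out
    out = []
    for j in range(n_out):
        lo, hi = j * scale, (j + 1) * scale
        acc, i = 0.0, math.floor(lo)
        while i < hi and i < n_in:
            overlap = min(hi, i + 1) - max(lo, i)  # length of input pixel i inside [lo, hi)
            if overlap > 0.0:
                acc += src[i] * overlap
            i += 1
        out.append(acc / scale)
    return out


def downsample(image, w_out, h_out):
    """Separable box-filter downsample of a grayscale image given as image[row][col]."""
    rows = [resample_1d(row, w_out) for row in image]
    cols = [resample_1d([row[c] for row in rows], h_out) for c in range(w_out)]
    return [[cols[c][r] for c in range(w_out)] for r in range(h_out)]
```

A real pipeline would apply this per color channel with a vectorized image library. Pure Python is slow for nearly ten million pixels.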